Early Performance Testing at Agile 2015

I enjoyed Eric Proegler’s session on Performance Testing in Agile Contexts [1] at Agile 2015. To get my technical fix, I split time between the development & software craftsmanship track and the testing & quality track. I wish these weren’t two separate tracks. I’d like to see more testers attending dev sessions and more devs at testing & quality sessions. Quality is a whole team effort; it helps if we can share our perspectives and expertise about what it takes to design, implement, test, and deploy quality software.

Last year Eric wrote a paper on early performance testing, which he presented at the 2014 Pacific Northwest Software Quality Conference. It covers most, if not all, of the points in his Agile 2015 talk in more depth. In it he challenges us to do performance testing earlier, when software is in a state of flux and there’s an opportunity to notice performance glitches and fix them while we still remember what has recently changed in our software and its environment:

“Most people still see performance testing as a single experiment, run against a completely assembled, code-frozen, production-resourced system, with the "accuracy" of simulation and environment considered critical to the value of the data the test provides. But what can we do to provide actionable and timely information about performance and reliability when the software is not complete, when the system is not yet assembled, or when the software will be deployed in more than one environment?”

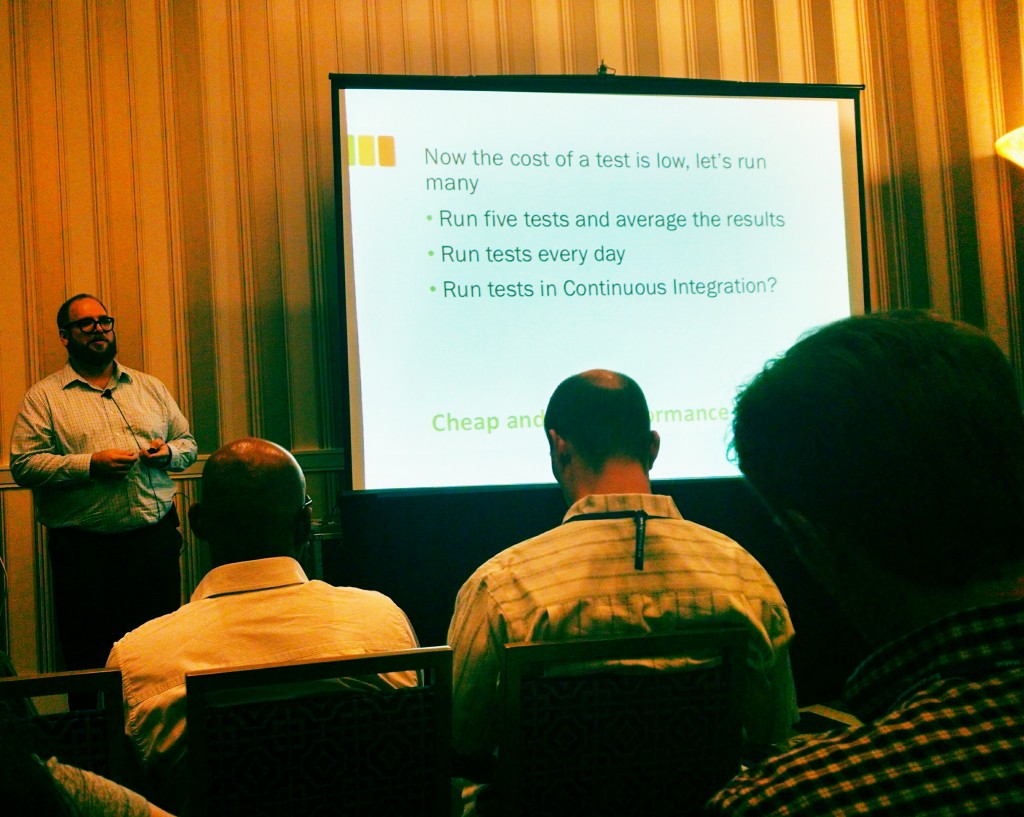

Early performance testing helps detect critical performance issues before they become show-stoppers. Run frequently, simple, to-the-point performance tests exercise common pathways through software. Eric calls these “calibration tests”:

“The concept I’d like to introduce here is Calibration – between builds, between environments, and between changed configurations. It is more important to track improvement and degradation as the project proceeds, and less important to attempt a specific projection of production experience.”

A calibration test should be simple to write and inexpensive to run; it does not require near-production realism or fidelity. Running such tests regularly tells you how recent software changes have affected performance. Unless you measure performance regularly, you won’t notice when it degrades. What’s a good calibration test? Something that exercises common pathways. Two common calibration tests for a web-facing transactional system might measure the time it takes to log in to a session and the time it takes to authorize the most common user business transaction.
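To make the idea concrete, here is a minimal sketch of what a calibration check could look like in Python. It is my own illustration, not something taken from Eric's paper or talk. The names `measure`, `compare_to_baseline`, and `fake_login`, the 20% tolerance, and the baseline figure are all assumptions chosen for the example; a real test would call your actual login path and keep a baseline recorded from an earlier build.

```python
import statistics
import time


def measure(operation, runs=20):
    """Return the median wall-clock time, in seconds, of calling operation()."""
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        operation()
        samples.append(time.perf_counter() - start)
    return statistics.median(samples)


def compare_to_baseline(current, baseline, tolerance=0.20):
    """Classify the change relative to an earlier build's measurement.

    tolerance is an assumed noise band, not a recommended value.
    """
    change = (current - baseline) / baseline
    if change > tolerance:
        return "degraded", change
    if change < -tolerance:
        return "improved", change
    return "unchanged", change


def fake_login():
    # Hypothetical stand-in for a real session login request.
    time.sleep(0.001)


if __name__ == "__main__":
    baseline = 0.0012  # assumed median (seconds) recorded from a previous build
    current = measure(fake_login)
    status, change = compare_to_baseline(current, baseline)
    print(f"login: {current * 1000:.2f} ms ({change:+.0%} vs. baseline): {status}")
```

The point of the sketch is the comparison, not the absolute number: the same test, run against each build in the same modest environment, shows whether login time is trending up or down.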

For the past several years I’ve been on a crusade to raise awareness about system qualities and to introduce agile teams to simple practices and techniques for specifying, measuring, monitoring, and delivering on non-functional requirements (or system qualities). This year I introduced a new workshop, Being Agile About System Qualities. I’ve blogged and spoken at conferences about simple, powerful techniques: agile quality scenarios, adding quality-related acceptance criteria to user stories, and specifying and monitoring quality characteristics using Agile Landing zones, among others. I’m in the middle of writing a pattern collection about Shifting from Quality Assurance to Agile Quality with longtime collaborator Joe Yoder.

Calibration tests are another important technique that I’m going to add to my toolkit and to our collection of quality-related patterns.

Intentionally and incrementally delivering on system qualities requires awareness, the application of practical, tactical techniques, and ongoing attention. I'm always on the lookout for more practical techniques for measuring, testing, checking, specifying, and delivering on system qualities on agile projects.

[1] This links to a similar talk at a different venue.
